Issue 43 - The Concurrent and Distributed Structure of Distributed Async Await

A Formal Model of the Concurrent and Distributed Structure of Distributed Async Await

Another weekend, another weekend read, this time all about The Concurrent and Distributed Structure of Distributed Async Await

In traditional async-await, everything happens on a single node, but what if you could extend this model to distribute tasks across nodes while preserving the same familiar structure?

At Resonate, we are working on Distributed Async Await, an extension of async-await where concurrency and distribution are first class citizens. So in this issue of The Weekend Read, we will discuss the distributed call graph.

The Concurrent Structure

Async-await results in an async call graph: The async call graph represents the flow of asynchronous executions and captures the dependencies between asynchronous executions.

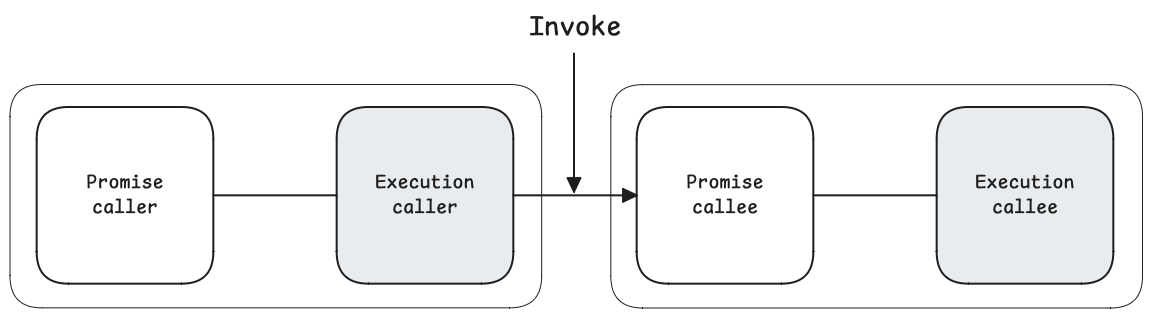

Invoke (Flow)

When an asynchronous execution, represented by a promise, invokes an asynchronous function, another asynchronous execution represented by a promise is spawned, executing concurrently to the caller.

Await (Dependency)

The caller coordinates with the callee by awaiting the promise representing the callee. Awaiting a promise suspends the caller until the callee terminates and returns its value.

Example

Let’s explore an asynchronous implementation of factorial. Calculating the factorial is the canonical example for a recursive function but not for an asynchronous function because there is no concurrency to exploit. However, factorial is a great example to explore a simple, linear async call graph.

def factorial(n):

if n == 0:

return 1

else:

return n * (yield FunctionCall(factorial, n-1))In traditional async-await, this entire call graph unfolds on a single node—defining the concurrent structure of a computation but not the distributed structure.

Distributed Async Await

Distributed Async Await is an extension of async-await and adds distribution to the asynchronous call graph by introducing two kinds of invocations: a local invocation and a remote invocation.

A remote invocation partitions the call graph, creating subgraphs that can unfold on different nodes.

def factorial(n):

if n == 0:

return 1

else:

# Here 3 and 1

if n % 2 == 1:

return n * (yield LocalFunctionCall(factorial, n-1))

# Here 2

else:

return n * (yield RemoteFunctionCall(factorial, n-1))With remote invocations, subgraphs execute atomically (non-distributed) on a single node, while the entire graph is fragmented (distributed) across multiple nodes. This fragmentation defines the distributed structure of the computation.

The Distributed Async Call Graph

The concurrent and distributed structures constrains how execution unfolds but don’t determine how execution unfolds. The runtime determines the actual ordering and distribution of executions with the constraints.

For instance, according to above illustration, we know that f(1) and f(0) execute on the same node, with f(1) terminating after f(0). However, we can’t say if f(3) and f(2) will run on the same node as f(1) and f(0)—They may run on a different node.

That is defined by a mapping of the logical computation onto the physical computation

Conclusion

In summary, Distributed Async Await extends async-await preserving concurrency while adding distribution to the structure of the call graph. Having that structure in place allows us to develop a scalable and reliable runtime executing the call graph.

Happy Reading